Tool Calling

Give models new capabilities and data access so they can follow instructions and respond to prompts.

Tool Calling (also known as Function Calling) is a powerful and flexible way for LLMs to interface with external systems and access data beyond their training corpus. This guide shows how to connect models to data and operations provided by your application. It focuses on function tools, which are defined by a JSON Schema, and also notes custom tools, which handle free-form text inputs and outputs.

How it works

Let’s first align on a few key terms related to tool calling. Once we share a common vocabulary, we’ll walk through practical examples to implement it.

1. Tools — Capabilities we make available to the model

Tools are the capabilities we expose to the model. When the model generates a response to a prompt, it may decide it needs data or functionality provided by a tool in order to follow the prompt’s instructions.

You can give the model access to tools such as:

- Get today’s weather for a location

- Fetch account details for a given user ID

- Issue a refund for a lost order

Or any other operation you want the model to be aware of or able to perform when responding to prompts.

When we make an API request to the model with a prompt, we can include a list of tools the model may consider using. For example, if we want the model to answer questions about the current weather somewhere in the world, we might give it access to a get_weather tool that accepts location as a parameter.

2. Tool Call — The model’s request to use a tool

A function call or tool call refers to a special kind of response we receive from the model when, after inspecting the prompt, it determines that to follow the prompt’s instructions it needs to invoke one of the tools we provided.

If the model receives a prompt like “What’s the weather in Paris?” in the API request, it can respond with a tool call to the get_weather tool with Paris as the location parameter.

3. Tool Call Output — The output we generate for the model

Function call output or tool call output refers to the response generated by the tool using the inputs from the model’s tool call. The tool call output can be structured JSON or plain text, and it should include a reference to the specific model tool call (referenced later via tool_call_id in the examples).

Completing our weather example:

- The model has access to a get_weather tool that accepts location as a parameter.

- In response to a prompt like “What’s the weather in Paris?”, the model returns a tool call with location set to Paris.

- Our tool call output could be a JSON structure like {"temperature": "25", "unit": "C"}, indicating the current temperature is 25 degrees.
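As a rough sketch, the three pieces above might look like this when written out as chat messages. The id `call_1` is an illustrative placeholder, and the field layout simply mirrors the examples later in this guide.

```python
import json

# The user's prompt.
user_message = {"role": "user", "content": "What's the weather in Paris?"}

# The model's tool call. The id is a made-up placeholder; note that the
# arguments arrive as a JSON-encoded string, not as a dict.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "get_weather",
                "arguments": '{"location": "Paris"}',
            },
        }
    ],
}

# The tool call output produced by our application, linked back to the
# model's request through tool_call_id.
tool_call = assistant_message["tool_calls"][0]
tool_message = {
    "role": "tool",
    "tool_call_id": tool_call["id"],
    "content": json.dumps({"temperature": "25", "unit": "C"}),
}

messages = [user_message, assistant_message, tool_message]
```

The `tool_call_id` on the last message is what lets the model match the output to the call it made.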

We then send all tool definitions, the original prompt, the model’s tool call, and the tool call output back to the model, and receive a final text response like:

It’s 25°C in Paris today.

4. Function tools vs. Tools

- A function is a specific type of tool defined by a JSON Schema. Function definitions allow the model to pass data to your application, where your code can access data or execute actions suggested by the model.

- In addition to function tools, there are custom tools that can handle free-form text input and output.

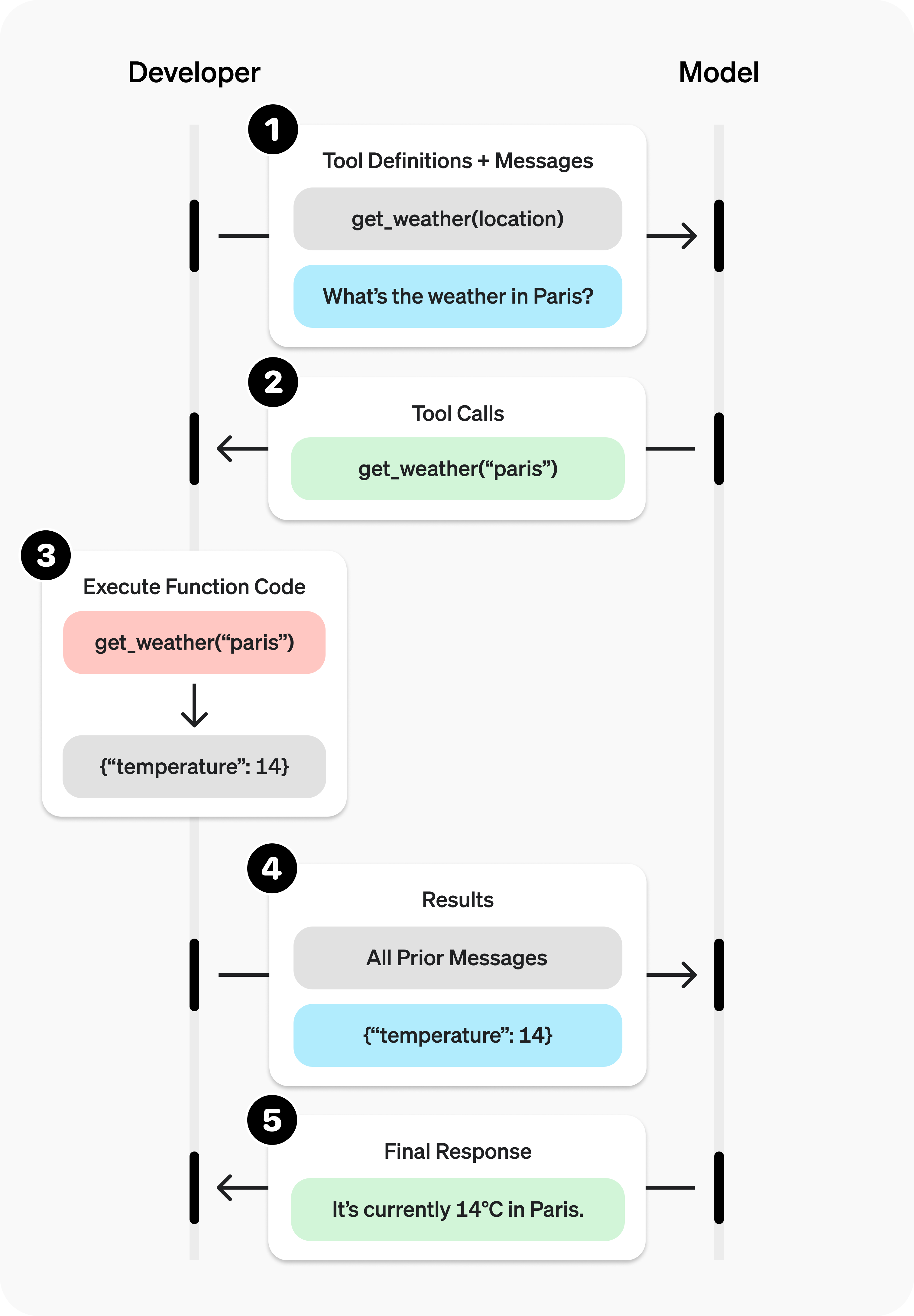

Tool calling flow

Tool calling is a multi-turn conversation between your application and the model via the ZenMux API. The flow has five major steps:

1. Make a request to the model that includes tools it can call

2. Receive the tool call(s) from the model

3. Execute code on your application side using the tool call inputs

4. Make a second request to the model including the tool outputs

5. Receive the final response from the model (or more tool calls)
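Because the last step can produce more tool calls, the five steps naturally form a loop. The sketch below shows one way that loop might be structured. It uses plain dictionaries and a hypothetical `fake_model` function standing in for the real API request, so `run_tool_loop`, `fake_model`, and `handlers` are illustrative names rather than part of any SDK.

```python
import json
from typing import Callable


def run_tool_loop(call_model: Callable, messages: list, handlers: dict, max_turns: int = 5) -> str:
    """Repeat the request/execute cycle until the model answers without tools."""
    for _ in range(max_turns):
        reply = call_model(messages)              # steps 1-2 (steps 4-5 on later turns)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]               # final text response
        for call in tool_calls:                   # step 3: run each requested tool
            args = json.loads(call["function"]["arguments"])
            result = handlers[call["function"]["name"]](**args)
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(result),
            })
    raise RuntimeError("The model kept requesting tools")


def fake_model(messages):
    """Hypothetical stand-in for the API: asks for the weather once, then answers."""
    if messages[-1]["role"] == "tool":
        data = json.loads(messages[-1]["content"])
        return {"role": "assistant", "content": f"It's {data['temperature']}°C in Paris today."}
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "get_weather", "arguments": '{"location": "Paris"}'},
        }],
    }


def get_weather(location):
    return {"temperature": "25", "unit": "C"}


history = [{"role": "user", "content": "What's the weather in Paris?"}]
answer = run_tool_loop(fake_model, history, {"get_weather": get_weather})
print(answer)
```

The `max_turns` cap is a design choice for this sketch: it keeps a misbehaving model from looping indefinitely.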

Tool calling example

Let’s walk through a complete tool calling flow using get_horoscope to fetch a daily horoscope for a zodiac sign.

A complete tool calling example:

from openai import OpenAI
import json

client = OpenAI(
    base_url="https://zenmux.ai/api/v1",
    api_key="<ZENMUX_API_KEY>",
)

# 1. Define the list of callable tools for the model
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_horoscope",
            "description": "Get today's horoscope for a zodiac sign.",
            "parameters": {
                "type": "object",
                "properties": {
                    "sign": {
                        "type": "string",
                        "description": "Zodiac sign name, e.g., Taurus or Aquarius",
                    },
                },
                "required": ["sign"],
            },
        },
    },
]

# Create a message list that we'll append to over time
input_list = [
    {"role": "user", "content": "What's my horoscope? I'm an Aquarius."}
]

# 2. Prompt the model with the defined tools
response = client.chat.completions.create(
    model="moonshotai/kimi-k2",
    tools=tools,
    messages=input_list,
)

# Save the function call outputs for a subsequent request
function_call = None
function_call_arguments = None
message = response.choices[0].message
input_list.append({
    "role": "assistant",
    "content": message.content,
    "tool_calls": [tool_call.model_dump() for tool_call in message.tool_calls] if message.tool_calls else None,
})

# tool_calls is None when the model answers directly, so fall back to an empty list
for item in message.tool_calls or []:
    if item.type == "function":
        function_call = item
        function_call_arguments = json.loads(item.function.arguments)

if function_call is None:
    raise RuntimeError("The model did not return a function call")

def get_horoscope(sign):
    return f"{sign}: You will meet a baby otter next Tuesday."

# 3. Execute the function logic for get_horoscope
result = {"horoscope": get_horoscope(function_call_arguments["sign"])}

# 4. Provide the function call result back to the model
input_list.append({
    "role": "tool",
    "tool_call_id": function_call.id,
    "name": function_call.function.name,
    "content": json.dumps(result),
})

print("Final input:")
print(json.dumps(input_list, indent=2, ensure_ascii=False))

response = client.chat.completions.create(
    model="moonshotai/kimi-k2",
    tools=tools,
    messages=input_list,
)

# 5. The model should now be able to respond!
print("Final output:")
print(response.model_dump_json(indent=2))
print("\n" + response.choices[0].message.content)

import OpenAI from "openai";

const openai = new OpenAI({
  baseURL: 'https://zenmux.ai/api/v1',
  apiKey: '<ZENMUX_API_KEY>',
});

// 1. Define the list of callable tools for the model
const tools: OpenAI.Chat.Completions.ChatCompletionTool[] = [
  {
    type: "function",
    function: {
      name: "get_horoscope",
      description: "Get today's horoscope for a zodiac sign.",
      parameters: {
        type: "object",
        properties: {
          sign: {
            type: "string",
            description: "Zodiac sign name, e.g., Taurus or Aquarius",
          },
        },
        required: ["sign"],
      },
    },
  },
];

// Create a message list that we'll append to over time
let input: OpenAI.Chat.Completions.ChatCompletionMessageParam[] = [
  { role: "user", content: "What's my horoscope? I'm an Aquarius." },
];

async function main() {
  // 2. Use a model with tool-calling capabilities
  let response = await openai.chat.completions.create({
    model: "moonshotai/kimi-k2",
    tools,
    messages: input,
  });

  // Save the function call outputs for a subsequent request
  let functionCall: OpenAI.Chat.Completions.ChatCompletionMessageFunctionToolCall | undefined;
  let functionCallArguments: Record<string, string> | undefined;

  input = input.concat(response.choices.map((c) => c.message));

  // A for...of loop (rather than forEach) lets TypeScript track these assignments
  for (const item of response.choices) {
    if (item.message.tool_calls && item.message.tool_calls.length > 0) {
      functionCall = item.message.tool_calls[0] as OpenAI.Chat.Completions.ChatCompletionMessageFunctionToolCall;
      functionCallArguments = JSON.parse(functionCall.function.arguments) as Record<string, string>;
    }
  }

  // 3. Execute the function logic for get_horoscope
  function getHoroscope(sign: string) {
    return sign + ": You will meet a baby otter next Tuesday.";
  }

  if (!functionCall || !functionCallArguments) {
    throw new Error("The model did not return a function call");
  }

  const result = { horoscope: getHoroscope(functionCallArguments.sign) };

  // 4. Provide the function call result back to the model
  input.push({
    role: 'tool',
    tool_call_id: functionCall.id,
    // @ts-expect-error must have name
    name: functionCall.function.name,
    content: JSON.stringify(result),
  });

  console.log("Final input:");
  console.log(JSON.stringify(input, null, 2));

  response = await openai.chat.completions.create({
    model: "moonshotai/kimi-k2",
    tools,
    messages: input,
  });

  // 5. The model should now be able to respond!
  console.log("Final output:");
  console.log(JSON.stringify(response.choices.map(v => v.message), null, 2));
}

main();

WARNING

Note: For reasoning models like GPT-5 or o4-mini, the final request must include both the tool call message returned by the model and the corresponding tool call outputs, so that the model can generate its summary.

Defining function tools

Function tools can be set via the tools parameter. A function tool is defined by its schema, which tells the model what it does and what input parameters it expects. A function tool definition has the following properties:

| Field | Description |

|---|---|

| type | Should always be function |

| function | Tool object |

| function.name | Function name (e.g., get_weather) |

| function.description | Details on when and how to use the function |

| function.parameters | JSON Schema defining the function’s input parameters |

| function.strict | Whether to enable strict schema adherence when generating function calls |

Here is the definition of the get_weather function tool:

{
  "type": "function",
  "function": {
    "name": "get_weather",
    "description": "Retrieve the current weather for a given location.",
    "parameters": {
      "type": "object",
      "properties": {
        "location": {
          "type": "string",
          "description": "City and country, e.g., Bogotá, Colombia"
        },
        "units": {
          "type": "string",
          "enum": ["celsius", "fahrenheit"],
          "description": "Unit for the returned temperature."
        }
      },
      "required": ["location", "units"],
      "additionalProperties": false
    },
    "strict": true
  }
}

Token usage

Under the hood, tools count against the model’s context window and are billed as prompt tokens. If you run into token limits, we recommend limiting the size and number of tools.
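To get a feel for how much of the prompt your tool definitions occupy, you could compare the serialized size of the full list against a trimmed one. The sketch below is only a heuristic: `approx_tool_tokens`, `select_tools`, and `make_tool` are hypothetical helpers, and the ratio of four characters per token is a crude assumption, since real tokenization differs by model.

```python
import json


def approx_tool_tokens(tools, chars_per_token=4):
    """Rough size estimate: compact JSON length divided by an assumed ratio."""
    serialized = json.dumps(tools, separators=(",", ":"))
    return len(serialized) // chars_per_token


def select_tools(tools, names):
    """Keep only the tool definitions whose function name is in `names`."""
    wanted = set(names)
    return [tool for tool in tools if tool["function"]["name"] in wanted]


def make_tool(name, description):
    """Build a minimal function tool definition for this demonstration."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": {}},
        },
    }


all_tools = [
    make_tool("get_weather", "Retrieve the current weather for a given location."),
    make_tool("send_email", "Send an email to a recipient."),
    make_tool("get_horoscope", "Get today's horoscope for a zodiac sign."),
]
weather_only = select_tools(all_tools, ["get_weather"])

print(approx_tool_tokens(all_tools), approx_tool_tokens(weather_only))
```

Treat the numbers as relative indicators only; the `usage` figures returned by the API are the authoritative count.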

Handling tool calls

When the model calls one of your tools, you must execute it and return the results. Because a response may include zero, one, or many tool calls, best practice is to assume multiple calls.

The response’s tool_calls array contains function calls (with type set to function). Each call includes the following fields:

- id: A unique identifier used when submitting the function result later

- type: The tool’s type, typically function or custom

- function: The function object, which contains:

  - name: The function name

  - arguments: JSON-encoded function arguments

Example response with multiple tool calls:

[
  {
    "id": "fc_12345xyz",
    "type": "function",
    "function": {
      "name": "get_weather",
      "arguments": "{\"location\":\"Paris, France\"}"
    }
  },
  {
    "id": "fc_67890abc",
    "type": "function",
    "function": {
      "name": "get_weather",
      "arguments": "{\"location\":\"Bogotá, Colombia\"}"
    }
  },
  {
    "id": "fc_99999def",
    "type": "function",
    "function": {
      "name": "send_email",
      "arguments": "{\"to\":\"[email protected]\",\"body\":\"Hi bob\"}"
    }
  }
]

Execute tool calls and attach results

for choice in response.choices:
    for tool_call in choice.message.tool_calls or []:
        if tool_call.type != "function":
            continue

        name = tool_call.function.name
        args = json.loads(tool_call.function.arguments)

        result = call_function(name, args)
        input_list.append({
            "role": "tool",
            "name": name,
            "tool_call_id": tool_call.id,
            "content": str(result)
        })

for (const choice of response.choices) {

  // tool_calls lives on the message and may be absent, so default to an empty list
  for (const toolCall of choice.message.tool_calls ?? []) {
    if (toolCall.type !== "function") {
      continue;
    }

    const name = toolCall.function.name;
    const args = JSON.parse(toolCall.function.arguments);

    // callFunction is async, so await it before serializing the result
    const result = await callFunction(name, args);
    input.push({
      role: "tool",
      // @ts-expect-error must have name
      name: name,
      tool_call_id: toolCall.id,
      content: String(result)
    });
  }
}

In the example above, we have a hypothetical callFunction that routes each call. Here’s a possible implementation:

Execute function calls and attach results

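def call_function(name, args):
    if name == "get_weather":
        return get_weather(**args)
    if name == "send_email":
        return send_email(**args)
    # Surface unexpected tool names instead of silently returning None
    raise ValueError(f"Unknown function: {name}")

const callFunction = async (name: string, args: Record<string, any>) => {
  if (name === "get_weather") {
    // Matches the location parameter used in the example tool calls above
    return getWeather(args.location);
  }
  if (name === "send_email") {
    return sendEmail(args.to, args.body);
  }
  // Surface unexpected tool names instead of silently returning undefined
  throw new Error(`Unknown function: ${name}`);
};

Formatting results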

Results must be strings; the contents of the string are up to you (JSON, error codes, plain text, etc.). The model will interpret the string as needed.

If your tool call has no return value (e.g., send_email), simply return a string to indicate success or failure (e.g., "success").
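One way to apply these rules consistently is to funnel every tool result through a single formatting helper and to report failures as strings too, so the model can react to them. This is a minimal sketch: `format_tool_result`, `run_tool`, and the `{"error": ...}` shape are assumptions made for illustration, not a format the API requires.

```python
import json


def format_tool_result(value) -> str:
    """Turn any tool return value into the string the tool message expects."""
    if value is None:
        return "success"          # tools without a return value, e.g. send_email
    if isinstance(value, str):
        return value
    return json.dumps(value, ensure_ascii=False)


def run_tool(handlers, name, raw_arguments) -> str:
    """Look up a handler, run it, and always return a string (even on failure)."""
    handler = handlers.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(raw_arguments)
        return format_tool_result(handler(**args))
    except Exception as exc:      # invalid JSON, wrong arguments, handler errors
        return json.dumps({"error": str(exc)})


# Hypothetical handlers used only to demonstrate the helpers.
handlers = {
    "send_email": lambda to, body: None,
    "get_weather": lambda location: {"temperature": 15},
}

print(run_tool(handlers, "send_email", '{"to": "a@b.c", "body": "hi"}'))
print(run_tool(handlers, "get_weather", '{"location": "Paris"}'))
```

Returning an error string rather than raising keeps the conversation going: the model sees what went wrong and can retry or explain the failure to the user.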

Merging results into the response

After appending results to your input, you can send them back to the model for a final response.

Send the results back to the model

response = client.chat.completions.create(
    model="moonshotai/kimi-k2",
    messages=input_list,
    tools=tools,
)

const response = await openai.chat.completions.create({
  model: "moonshotai/kimi-k2",
  messages: input,
  tools,
});

Final response

"It's about 15°C in Paris and about 18°C in Bogotá. I’ve sent that email to Bob."

Additional configuration

Controlling tool invocation behavior (tool_choice)

By default, the model decides when and how many tools to call. You can control tool invocation using the tool_choice parameter.

- Auto (default): The model may call zero, one, or multiple tools. tool_choice: "auto"

- Required: The model must call one or more tools. tool_choice: "required"

Allowed tools (allowed_tools)

If you want the model to use only a subset of the tool list in a request, without modifying the tools list you pass in (to maximize prompt caching), you can configure allowed_tools.

"tool_choice": {
  "type": "allowed_tools",
  "mode": "auto",
  "tools": [
    { "type": "function", "function": { "name": "get_weather" } },
    { "type": "function", "function": { "name": "get_time" } }
  ]
}

You can also set tool_choice to "none" to force the model to call no tools.
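If you build the allowed_tools value in code, a small helper can catch typos in tool names before the request is sent. The sketch below assumes the tool_choice shape shown in this section; `allowed_tools_choice` is a hypothetical helper, not an SDK function.

```python
def allowed_tools_choice(tools, names, mode="auto"):
    """Build an allowed_tools tool_choice restricted to `names`.

    Raises ValueError if a name is not defined in the full `tools` list,
    which stays untouched so the request prefix remains cache-friendly.
    """
    defined = {tool["function"]["name"] for tool in tools}
    missing = [name for name in names if name not in defined]
    if missing:
        raise ValueError(f"Not in tools list: {missing}")
    return {
        "type": "allowed_tools",
        "mode": mode,
        "tools": [{"type": "function", "function": {"name": name}} for name in names],
    }


# Minimal tool definitions, just enough for the name lookup in this demo.
tools = [
    {"type": "function", "function": {"name": "get_weather"}},
    {"type": "function", "function": {"name": "get_time"}},
    {"type": "function", "function": {"name": "send_email"}},
]

tool_choice = allowed_tools_choice(tools, ["get_weather", "get_time"])
print(tool_choice)
```

The returned dictionary would be passed as the `tool_choice` argument alongside the unchanged `tools` list.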

Streaming

Streaming tool calls work similarly to streaming regular responses: set stream to true and you’ll receive a stream of event objects.

Streaming tool calls:

from openai import OpenAI

client = OpenAI(
    base_url="https://zenmux.ai/api/v1",
    api_key="<ZENMUX_API_KEY>",
)

tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country, e.g., Bogotá, Colombia"
                }
            },
            "required": [
                "location"
            ],
            "additionalProperties": False
        }
    }
}]

stream = client.chat.completions.create(
    model="moonshotai/kimi-k2",
    messages=[{"role": "user", "content": "How's the weather in Paris today?"}],
    tools=tools,
    stream=True
)

for event in stream:
    print(event.choices[0].delta.model_dump_json())

import { OpenAI } from "openai";

const openai = new OpenAI({
  baseURL: 'https://zenmux.ai/api/v1',
  apiKey: '<ZENMUX_API_KEY>',
});

const tools: OpenAI.Chat.Completions.ChatCompletionTool[] = [{
  type: "function",
  function: {
    name: "get_weather",
    description: "Get the current temperature (Celsius) for the provided coordinates.",
    parameters: {
      type: "object",
      properties: {
        latitude: { type: "number" },
        longitude: { type: "number" }
      },
      required: ["latitude", "longitude"],
      additionalProperties: false
    },
    strict: true,
  },
}];

async function main() {
  const stream = await openai.chat.completions.create({
    model: "moonshotai/kimi-k2",
    messages: [{ role: "user", content: "How's the weather in Paris today?" }],
    tools,
    stream: true,
  });

  for await (const event of stream) {
    console.log(JSON.stringify(event.choices[0].delta));
  }
}

main()

Output events

{"content":"I need","role":"assistant"}

{"content":"the coordinates of Paris","role":"assistant"}

{"content":" to","role":"assistant"}

{"content":" fetch","role":"assistant"}

{"content":" the","role":"assistant"}

{"content":" weather","role":"assistant"}

{"content":" information","role":"assistant"}

{"content":".","role":"assistant"}

{"content":"Paris's","role":"assistant"}

{"content":" latitude","role":"assistant"}

{"content":" is about","role":"assistant"}

{"content":" 48","role":"assistant"}

{"content":".","role":"assistant"}

{"content":"856","role":"assistant"}

{"content":"6","role":"assistant"}

{"content":",","role":"assistant"}

{"content":" and","role":"assistant"}

{"content":" the","role":"assistant"}

{"content":" longitude","role":"assistant"}

{"content":" is","role":"assistant"}

{"content":" 2","role":"assistant"}

{"content":".","role":"assistant"}

{"content":"352","role":"assistant"}

{"content":"2","role":"assistant"}

{"content":".","role":"assistant"}

{"content":"Let me","role":"assistant"}

{"content":" check","role":"assistant"}

{"content":" today's","role":"assistant"}

{"content":" weather","role":"assistant"}

{"content":" in Paris","role":"assistant"}

{"content":".","role":"assistant"}

{"content":"","role":"assistant","tool_calls":[{"index":0,"id":"get_weather:0","function":{"arguments":"","name":"get_weather"},"type":"function"}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"{\""}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"latitude"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"\":"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":" "}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"48"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"."}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"856"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"6"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":","}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":" \""}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"longitude"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"\":"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":" "}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"2"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"."}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"352"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"2"}}]}

{"content":"","role":"assistant","tool_calls":[{"index":0,"function":{"arguments":"}"}}]}

{"content":"","role":"assistant"}

When the model calls one or more tools, each tool call begins with an event whose tool_calls entry has a non-empty type:

{"content":"","role":"assistant","tool_calls":[{"index":0,"id":"get_weather:0","function":{"arguments":"","name":"get_weather"},"type":"function"}]}

Below is a snippet showing how to aggregate deltas into the final tool_call object.

Accumulate tool_call content

final_tool_calls = {}

for event in stream:
    delta = event.choices[0].delta
    # A single chunk may carry fragments for several tool calls
    for tool_call in delta.tool_calls or []:
        if tool_call.type == "function":
            # First fragment of a call: carries the id, name, and type
            final_tool_calls[tool_call.index] = tool_call
        else:
            # Later fragments only extend the JSON arguments string
            final_tool_calls[tool_call.index].function.arguments += tool_call.function.arguments or ""

print("Final tool calls:")
for index, tool_call in final_tool_calls.items():
    print(f"Tool Call {index}:")
    print(tool_call.model_dump_json(indent=2))

const finalToolCalls: OpenAI.Chat.Completions.ChatCompletionMessageFunctionToolCall[] = [];

for await (const event of stream) {
  const delta = event.choices[0].delta;
  // A single chunk may carry fragments for several tool calls
  for (const piece of delta.tool_calls ?? []) {
    const toolCall = piece as OpenAI.Chat.Completions.ChatCompletionMessageFunctionToolCall & {index: number};
    if (toolCall.type === "function") {
      // First fragment of a call: carries the id, name, and type
      finalToolCalls[toolCall.index] = toolCall;
    } else {
      // Later fragments only extend the JSON arguments string
      finalToolCalls[toolCall.index].function.arguments += toolCall.function.arguments ?? "";
    }
  }
}

console.log("Final tool calls:");
console.log(JSON.stringify(finalToolCalls, null, 2));

Accumulated final_tool_calls[0]

{
  "index": 0,
  "id": "get_weather:0",
  "function": {
    "arguments": "{\"latitude\": 48.8566, \"longitude\": 2.3522}",
    "name": "get_weather"
  },
  "type": "function"
}
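The same aggregation can be tried offline against plain dictionaries shaped like the output events above. The following sketch is an illustration under that assumption: `accumulate_tool_calls` is a hypothetical helper, and the sample deltas are a shortened version of the streamed events.

```python
import json


def accumulate_tool_calls(deltas):
    """Merge streamed tool call fragments into complete tool calls, keyed by index."""
    calls = {}
    for delta in deltas:
        for piece in delta.get("tool_calls") or []:
            entry = calls.setdefault(
                piece["index"],
                {"id": None, "type": "function", "function": {"name": "", "arguments": ""}},
            )
            if piece.get("id"):
                entry["id"] = piece["id"]
            function = piece.get("function") or {}
            if function.get("name"):
                entry["function"]["name"] = function["name"]
            # Argument fragments are concatenated in arrival order.
            entry["function"]["arguments"] += function.get("arguments") or ""
    return [calls[index] for index in sorted(calls)]


sample_deltas = [
    {"content": "Let me check.", "role": "assistant"},
    {"content": "", "tool_calls": [{"index": 0, "id": "get_weather:0", "type": "function",
                                    "function": {"name": "get_weather", "arguments": ""}}]},
    {"content": "", "tool_calls": [{"index": 0, "function": {"arguments": "{\"latitude\": 48.8566,"}}]},
    {"content": "", "tool_calls": [{"index": 0, "function": {"arguments": " \"longitude\": 2.3522}"}}]},
    {"content": "", "role": "assistant"},
]

final_calls = accumulate_tool_calls(sample_deltas)
arguments = json.loads(final_calls[0]["function"]["arguments"])
print(final_calls[0]["function"]["name"], arguments)
```

Parsing the arguments only after the stream ends matters here, because intermediate fragments are not valid JSON on their own.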